The Challenge

The organization needed to strengthen its evaluation infrastructure to better measure program effectiveness, demonstrate impact to funders, and support data-driven decision making across 30+ programs serving diverse populations. While evaluation processes existed, they lacked consistency across program formats, which made it difficult to compare results and identify patterns. The organization needed standardized assessment approaches, more systematic data tracking, and clearer frameworks for measuring learner outcomes and demonstrating ROI to stakeholders.

My Role

I served as lead evaluator responsible for strengthening the organization’s evaluation systems and establishing data-driven measurement practices:

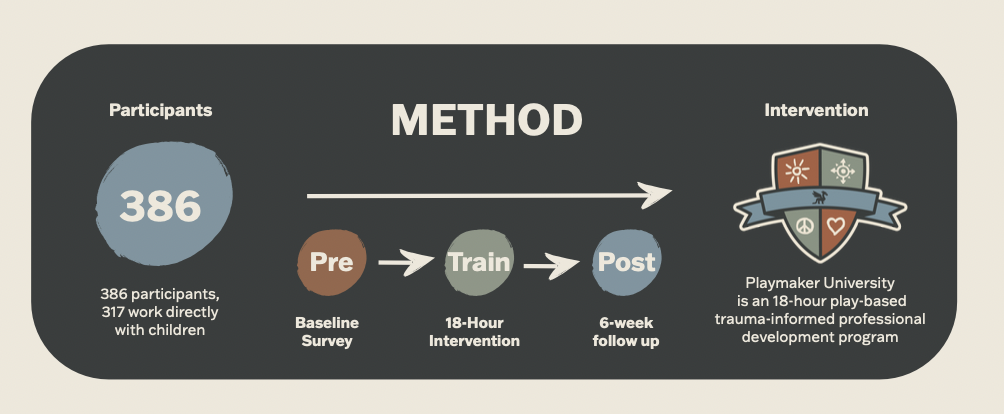

- Evaluation Framework Design: Led the initiative to develop and implement a comprehensive pre/post evaluation methodology with a 6-week follow-up protocol to measure both immediate outcomes and sustained behavior change.

- Instrument Selection: Researched and advocated for validated assessment tools based on a literature review, successfully pushing for adoption of the Maslach Burnout Inventory and the Student-Teacher Relationship Scale while collaborating with subject matter experts on additional measures, including the Flourishing Scale.

- Assessment Development: Created evaluation surveys and protocols, working with subject matter experts to refine instruments for organizational context while maintaining research validity.

- Data Management: Established workflows for loading cohorts into Klaviyo and SurveyMonkey, downloading and cleaning data, and preparing datasets for analysis.

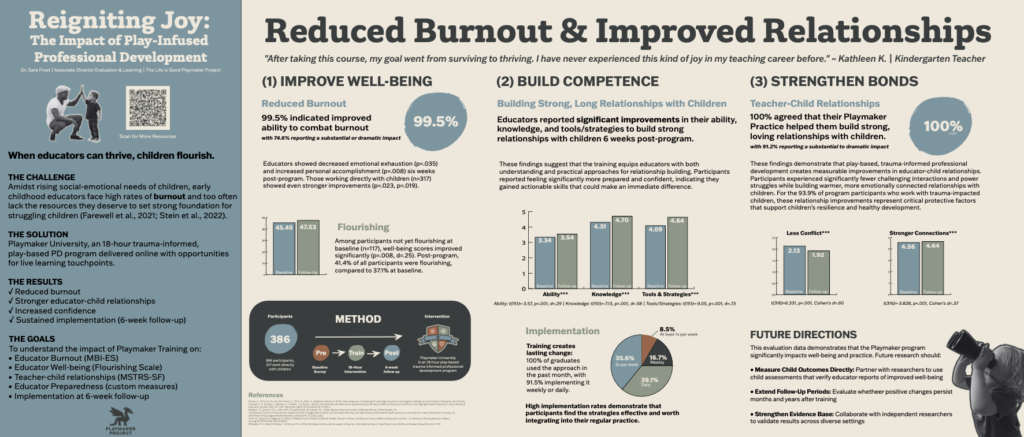

- Statistical Analysis: Conducted quantitative analysis using SPSS, including paired t-tests, effect size calculations, and descriptive statistics to demonstrate program impact.

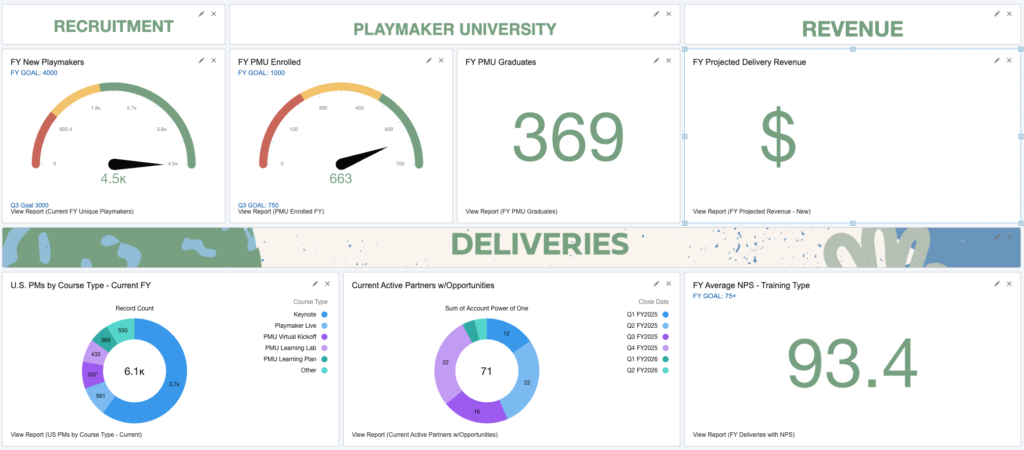

- Reporting & Dashboards: Designed KPI dashboards and automated reports for program monitoring and stakeholder communication.

- Standardization: Improved consistency across evaluation processes by aligning training surveys across 30+ program formats to enable cross-program comparison and pattern identification.
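For readers less familiar with the paired analysis mentioned above, the sketch below shows the arithmetic behind a paired t-test and one common paired effect size, Cohen's d_z (mean change divided by the standard deviation of the change scores). The analysis itself was run in SPSS; this Python version is only an illustration, the function name is hypothetical, and this case study does not state which effect size formula was reported. It computes t and degrees of freedom but not a p-value, which SPSS (or `scipy.stats.ttest_rel`) would supply.

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_t_and_dz(pre: Sequence[float], post: Sequence[float]) -> Tuple[float, float, int, float]:
    """Paired t statistic and Cohen's d_z for matched pre/post scores.

    Index i must refer to the same participant in both sequences.
    Returns (mean change, t, degrees of freedom, d_z).
    """
    if len(pre) != len(post):
        raise ValueError("pre and post must have one score per participant")
    n = len(pre)
    if n < 2:
        raise ValueError("need at least two matched participants")

    # Work on per-participant change scores (post minus pre).
    diffs = [after - before for before, after in zip(pre, post)]
    mean_diff = mean(diffs)
    sd_diff = stdev(diffs)  # sample SD with an n - 1 denominator
    if sd_diff == 0:
        raise ValueError("all change scores are identical; t and d_z are undefined")

    t = mean_diff / (sd_diff / math.sqrt(n))  # mean change over its standard error
    d_z = mean_diff / sd_diff                 # equivalently t / sqrt(n)
    return mean_diff, t, n - 1, d_z
```

With made-up scores pre = [2, 3, 4, 3, 2] and post = [3, 4, 4, 5, 3], the change scores are [1, 1, 0, 2, 1], giving a mean change of 1, t ≈ 3.16 with 4 degrees of freedom, and d_z ≈ 1.41. For scales where lower is better, such as burnout measures, an improvement shows up as a negative mean change.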

Approach

Applied rigorous research methodology and systems thinking:

Research & Planning:

Explored academic literature on educator wellbeing, professional competence, and relationship quality to identify validated instruments and evidence-based measurement approaches. Assessed organizational evaluation needs and developed an evaluation strategy aligned with program goals and funder requirements.

Framework Development:

Created a comprehensive pre/post evaluation framework defining what to measure, when to measure it, and how to analyze results. Established organizational evaluation standards applicable across all 30+ program formats. Designed data collection workflows balancing research rigor with participant burden.
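The "when to measure it" part of the framework can be sketched as a per-cohort survey calendar. In this minimal Python example only the 6-week follow-up interval comes from the framework described here; sending the pre-survey one week before training, sending the post-survey on the final training day, and counting the follow-up from that final day are assumptions, and `survey_schedule` is a hypothetical helper.

```python
from datetime import date, timedelta
from typing import Dict

# Assumed lead time for the pre-survey (not specified in this case study).
PRE_SURVEY_LEAD = timedelta(days=7)
# The 6-week follow-up interval from the evaluation framework.
FOLLOW_UP_INTERVAL = timedelta(weeks=6)


def survey_schedule(training_start: date, training_end: date) -> Dict[str, date]:
    """Return send dates for the pre, post, and follow-up surveys of one cohort."""
    if training_end < training_start:
        raise ValueError("training_end must not be earlier than training_start")
    return {
        "pre": training_start - PRE_SURVEY_LEAD,         # baseline before training begins
        "post": training_end,                            # assumed: final training day
        "follow_up": training_end + FOLLOW_UP_INTERVAL,  # 6 weeks after training ends
    }
```

For a hypothetical cohort trained 4 to 8 March 2024, this yields a pre-survey on 26 February, a post-survey on 8 March, and a follow-up on 19 April 2024.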

Assessment Refinement:

Tested evaluation instruments with the alumni group and initial cohorts. Refined survey questions based on participant feedback and response rates. Adjusted data collection timing to optimize completion rates. Validated data quality and analysis procedures through iterative testing.

Data Management Systems:

Established workflows using the existing SurveyMonkey platform for data collection and Salesforce for tracking. Developed processes for managing cohort enrollment, data downloading, cleaning, and preparation for statistical analysis in SPSS. Created dashboards and reports visualizing key metrics for stakeholders.
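One way to picture the download, cleaning, and preparation step is the sketch below, which turns two exported survey waves into matched pre/post pairs ready for a paired analysis. The column names, the use of an email address as the matching key, excluding (rather than imputing) incomplete responses, and keeping a participant's last complete response are all hypothetical choices for illustration, not the organization's documented procedure. The simple item mean is also a placeholder: each published instrument has its own scoring rules, such as subscale sums or reverse-keyed items.

```python
from typing import Dict, List, Optional, Tuple

Row = Dict[str, str]  # one exported survey response: column name -> cell text


def normalize_id(raw: str) -> str:
    """Trim and lower-case an identifier so the same person matches across exports."""
    return raw.strip().lower()


def score_row(row: Row, items: List[str]) -> Optional[float]:
    """Mean of the scale items, or None if any item is blank or non-numeric."""
    if not items:
        raise ValueError("at least one scale item is required")
    values = []
    for item in items:
        try:
            values.append(float(row.get(item, "").strip()))
        except ValueError:
            return None  # incomplete response: exclude rather than impute
    return sum(values) / len(values)


def clean_wave(rows: List[Row], id_col: str, items: List[str]) -> Dict[str, float]:
    """Map participant ID -> scale score for one survey wave.

    Rows are assumed to be in submission order, so a later complete
    response from the same participant replaces an earlier one.
    """
    scores: Dict[str, float] = {}
    for row in rows:
        pid = normalize_id(row.get(id_col, ""))
        score = score_row(row, items)
        if pid and score is not None:
            scores[pid] = score
    return scores


def pair_waves(pre: Dict[str, float], post: Dict[str, float]) -> List[Tuple[str, float, float]]:
    """Keep only participants with both a pre and a post score, sorted by ID."""
    return [(pid, pre[pid], post[pid]) for pid in sorted(pre.keys() & post.keys())]
```

Matching on an inner join means participants who skip either wave drop out of the paired analysis, so the number of matched pairs is worth reporting alongside any pre/post result.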

Scale & Sustainability:

Trained program staff on evaluation administration and data interpretation. Documented standard operating procedures for evaluation processes to support IACET accreditation. Established a comprehensive reporting cycle, including post-training reports, quarterly guide reports, mid-year reviews, and annual reviews, and created templates enabling consistent evaluation across all programs.

Deliverables

Evaluation Infrastructure:

- Comprehensive pre/post evaluation framework with 6-week follow-up protocol

- Standardized assessment instruments using validated research-backed scales (Maslach Burnout Inventory, Student-Teacher Relationship Scale, Flourishing Scale)

- Data collection workflows and procedures

- Standard operating procedures for evaluation processes

- Quality assurance protocols

Data Management & Analysis:

- Workflows for cohort enrollment and survey distribution via SurveyMonkey

- Data cleaning and preparation procedures for statistical analysis

- SPSS analysis procedures, including paired t-tests and effect size calculations

- KPI dashboards and high-impact reports for stakeholder communication

- Custom Salesforce reports for program monitoring

Reporting Outputs:

- Post-training evaluation reports

- Quarterly guide reports

- Mid-year reviews for organizational leadership

- Annual impact summaries for grant applications and funders

- Conference presentations and research posters

- Data visualizations for board and stakeholder reporting

Organizational Standards:

- Standardized evaluation approach across 30+ program formats

- Funder reporting templates

- Documentation supporting IACET accreditation requirements

Impact

Organizational Intelligence:

- Established an evidence base demonstrating program effectiveness across 30+ program formats

- Enabled data-driven decision-making through consistent measurement and reporting

- Provided credible metrics for board reporting and strategic planning

- Created a foundation for continuous improvement based on learner feedback and outcome data

Program Improvement:

- Informed curriculum and program revisions based on assessment results and participant feedback

- Identified patterns in learner engagement and completion through data analysis

- Provided insights on program timing and delivery based on completion data

- Enabled evidence-based conversations about program effectiveness

Funding & Growth:

- Provided compelling evidence supporting grant applications and renewals

- Demonstrated measurable outcomes to existing and prospective funders

- Enhanced organizational credibility through rigorous evaluation methodology

- Enabled expansion to new audiences backed by proven effectiveness data

Research Contributions:

- Generated findings on educator well-being and professional development effectiveness

- Created conference presentations and research posters sharing evaluation results

- Contributed to the organization’s reputation for evidence-based practice

- Developed an evaluation approach that is now standardized across 30+ program formats, including a flagship program serving 2,500+ learners